Photo: Gerd Leonhard/Flickr (CC BY-SA 2.0)

Artificial intelligence. Everyone talks about it, many pretend to understand it, but how many of us truly measure its disruptive potential? One need not be as alarmist as Yuval Noah Harari, who identifies the rise of AI and bioengineering as an existential threat to humankind on a par with nuclear war and climate change, to recognize that the potential for AI to affect how we lead our lives is very real, for better and for worse.

It’s clear that regulating the development of such disruptive technology must start now, and many initiatives are already emerging within companies and civil society to guide the “ethical” development and use of AI. But why are we talking about ethics rather than law and human rights?

The potential impacts of AI

AI technology holds incredible promise for driving social progress, but it can also undermine fundamental rights and freedoms. A frequently cited example is discrimination arising from algorithmic bias, where patterns of human bias inherent in the historical data used to train an AI algorithm are embedded and perpetuated by the system. Our privacy is also at risk from increased surveillance, and recent scandals have highlighted the potential for malicious “bots” (producing fake content) and AI-powered social media algorithms (promoting popular content) to facilitate the spread of disinformation.

Ethical guidelines for AI

Many voices have called for a human rights approach to AI regulation, culminating notably in the Toronto Declaration on protecting the right to equality and non-discrimination in machine learning systems (May 2018), which was promisingly heralded as “a first step towards making the human rights framework a foundational component of the fast-developing field of AI and data ethics”.

However, the discussion to date has predominantly been framed in terms of “ethical” guidance rather than a legal or rights-based framework. In a geopolitical context of intense technological competition between Europe, the United States and China, the challenge is to devise a regulatory framework that controls potential excesses without stifling innovation. This competitive pressure not to constrain innovation may explain the preference for more general “ethical” guidelines.

This is not so surprising in the case of voluntary industry initiatives: major AI players including Google, Microsoft and IBM have published ethical principles for the development of their AI technology.

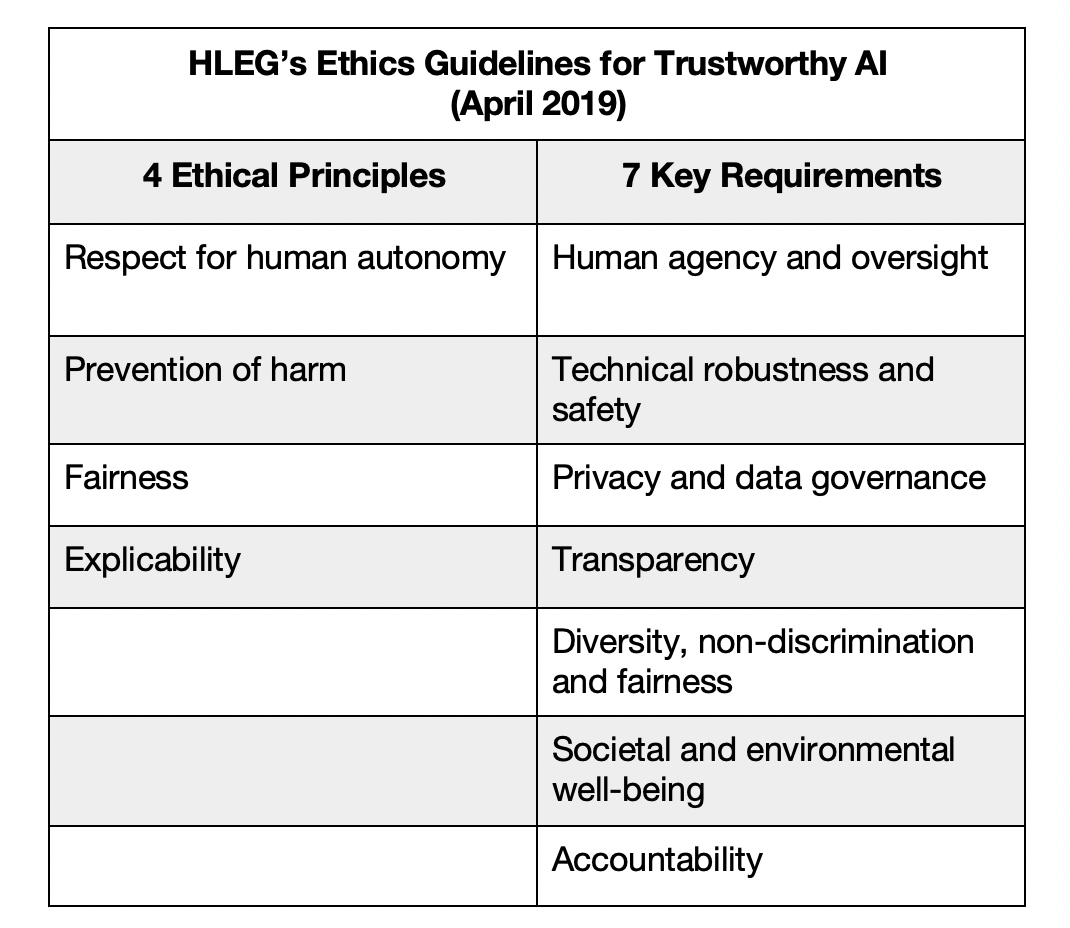

However, support for an “ethical” framework was recently reaffirmed by the European Commission’s High Level Expert Group on Artificial Intelligence (HLEG). Following an extensive consultation, the HLEG published “Ethics Guidelines for Trustworthy AI” (the “EU Guidelines”) designed to guide the AI community in the responsible development and use of “trustworthy” AI, defined as AI that is lawful, ethical and robust. Although the EU Guidelines are “based” on the fundamental rights enshrined in European and international law, they are founded on four ethical principles to be realized through seven key requirements, which sometimes echo human rights concepts but have little predefined meaning.

Indeed, ethical concepts like fairness and transparency are vague and culturally relative, leaving the door open to variable interpretations and levels of protection. The EU Guidelines acknowledge the “many different interpretations of fairness”, while Google’s AI Principles “recognize that distinguishing fair from unfair biases […] differs across cultures and societies”. How can such subjective notions of “fairness” tell us what risks to individuals are outweighed by the societal benefits of facial recognition? Or when a company should be held accountable for failing to sufficiently reduce unfair bias in its algorithms? And how useful are culturally relative principles for guiding the development of technology that is global in reach?

Although the EU Guidelines recognize the global reach of AI and encourage work towards a global rights-based framework, they are also expressly intended to foster European innovation and leadership in AI. In that light, the language of ethics may have been more encouraging than talk of human rights protection. According to the HLEG, the priority was speed, in order to keep pace with and inform the wider AI debate, and the EU Guidelines were not intended as a substitute for regulation (on which the HLEG was separately tasked with making recommendations to the EU Commission – see its “Policy and Investment Recommendations” published on 26 June 2019).

But if we agree that AI must be regulated (through existing or new laws), the EU seems to have missed an opportunity to frame the upcoming regulatory debate in human rights terms.

A human rights-based approach?

It is of course encouraging that ethical principles are being adopted with support from the AI industry. But a human rights-based approach would offer a more robust framework for the lawful and ethical development and use of AI.

Human rights are an internationally agreed set of norms that represent the most universal expression of our shared values, in a shared language and supported by mechanisms and institutions for accountability and redress. As such, they offer clarity and structure and the normative power of law.

For example, human rights law provides a clear framework for balancing competing interests in the development of technology: its tried and tested jurisprudence requires restrictions to human rights (like privacy or non-discrimination) to be prescribed by law, pursue a legitimate aim, and be necessary and proportionate to that aim. Each term is a defined concept against which actions can be objectively measured and made accountable.

The EU Guidelines suggest that adherence to ethical principles sets the bar higher than formal compliance with laws (which are not always up to speed with technology or may not be well suited to addressing certain issues), but the reality is that ethics are much more easily manipulated to support a given company or government’s agenda, with limited recourse for anyone who disagrees.

Human rights offer a holistic framework for comprehensively assessing the potential impact of a disruptive technology like AI on all our rights and freedoms (civil, political, economic, cultural and social), leaving no blind spots.

Further, they come with the benefit of widely accepted and tested standards of best practice for protecting and respecting human rights, including the UN Guiding Principles on Business and Human Rights (UNGPs). For example, human rights due diligence under the UNGPs includes communicating on how impacts are identified and addressed, the importance of which is magnified when developing a technology that is poorly (if at all) understood by most people. Affected rights holders must also have access to effective remedy. This is being discussed in the context of AI, for example in relation to the “right to an explanation” of automated decision-making under the EU GDPR. But it is still unclear what effective remedy for AI impacts should look like, a question that the UNGP criteria (legitimate, accessible, predictable, equitable and transparent) could help answer.

This is not to say that the ethical conversations and guidance that have emerged should be discarded. On the contrary, they should be built on with the involvement of human rights practitioners to ensure we continue to work towards an enforceable global legal framework for the regulation of AI technology that is sufficiently adaptable to a future we have yet to imagine.